This guide focuses on configuring the Tailscale Kubernetes operator to expose Kubernetes API servers across multiple clusters for ArgoCD multi-cluster management.

Prerequisites

- Multiple Kubernetes clusters

- Tailscale account with admin access

- Tailscale Kubernetes operator installed in each cluster

Configuring Operator Hostname and API Server Proxy

When installing the Tailscale operator in each cluster, set these critical parameters:
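
As a rough sketch, assuming the operator is installed with Helm, the two parameters named in this section can be written to a per-cluster values file. The file name cluster1-values.yaml is hypothetical, and the OAuth client credentials the chart also needs are left out.

```shell
# Write Helm values for the first cluster (file name is an assumption).
cat > cluster1-values.yaml <<'EOF'
operatorConfig:
  # Unique hostname for this cluster's operator in the tailnet
  hostname: cluster1-k8s-operator
apiServerProxyConfig:
  # Quoted so Helm passes the string "true", not a boolean
  mode: "true"
EOF
```

The file could then be passed to the install, for example `helm upgrade --install tailscale-operator tailscale/tailscale-operator --namespace tailscale --create-namespace -f cluster1-values.yaml`. The release name and namespace here are assumptions; use whatever your existing install uses.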


Key parameters:

- operatorConfig.hostname: Sets a unique hostname for the operator in your tailnet

- apiServerProxyConfig.mode=true: Enables Kubernetes API server proxying

Configure each cluster with a unique hostname (e.g., cluster1-k8s-operator, cluster2-k8s-operator).

Create Egress Services in ArgoCD Cluster

Apply the following configuration to create egress services in the ArgoCD cluster:
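
A minimal sketch of such egress services, assuming one ExternalName Service per remote cluster that points at that cluster's operator through the tailscale.com/tailnet-fqdn annotation. The Service names and the file name are hypothetical; the operator hostnames follow the examples used earlier in this guide.

```shell
# Write one egress Service per remote cluster (names are assumptions).
cat > egress-services.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: cluster1-egress
  annotations:
    tailscale.com/tailnet-fqdn: cluster1-k8s-operator.<TAILNET>.ts.net
spec:
  type: ExternalName
  externalName: placeholder  # any value; the operator overwrites it
---
apiVersion: v1
kind: Service
metadata:
  name: cluster2-egress
  annotations:
    tailscale.com/tailnet-fqdn: cluster2-k8s-operator.<TAILNET>.ts.net
spec:
  type: ExternalName
  externalName: placeholder  # any value; the operator overwrites it
EOF
```

After substituting the tailnet name, the manifest could be applied in the ArgoCD cluster with `kubectl apply -f egress-services.yaml`.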


Replace <TAILNET> with your Tailscale tailnet name.

Configure Tailscale ACL Grants for Cross-Cluster Access

For egress proxies to communicate with Kubernetes API servers exposed by the Tailscale operators, you need to configure appropriate ACL grants in your Tailscale admin console.

Why ACL Grants Are Required

Without proper ACL grants:

- Access to remote Kubernetes API servers will be blocked by Tailscale’s access controls

- ArgoCD will be unable to manage resources across clusters through the Tailscale egress proxies

- Cross-cluster API server communication will fail with authentication errors

Configuring ACL Grants

Add the following to your Tailscale ACL configuration:
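
Pieced together from the components this section describes, the grant might look like the sketch below. The capability name tailscale.com/cap/kubernetes under "app" is not stated in the text; it is assumed here based on Tailscale's Kubernetes operator documentation. The file name is hypothetical and only serves to keep the fragment in one place before it is merged into the policy file.

```shell
# Save the grant for review before merging it into the tailnet policy file.
cat > k8s-grant.json <<'EOF'
{
  "grants": [
    {
      "src": ["autogroup:admin", "tag:k8s"],
      "dst": ["tag:k8s-operator"],
      "app": {
        "tailscale.com/cap/kubernetes": [
          {
            "impersonate": { "groups": ["system:masters"] },
            "recorder": ["tag:k8s-recorder"],
            "enforceRecorder": false
          }
        ]
      }
    }
  ]
}
EOF
```

If the policy file already has a "grants" array, only the inner object needs to be added to it.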


Key components of this configuration:

- "src": ["autogroup:admin", "tag:k8s"] - Specifies who can access the Kubernetes API. Here, it allows admin users and any node tagged with tag:k8s (your ArgoCD cluster)

- "dst": ["tag:k8s-operator"] - Specifies which Kubernetes operators can be accessed (targets)

- "impersonate": {"groups": ["system:masters"]} - Grants administrative access to the Kubernetes API

- "recorder": ["tag:k8s-recorder"] - Optional audit logging configuration

- "enforceRecorder": false - Makes audit recording optional

This grant enables Tailscale egress proxies (tagged with tag:k8s) to communicate with the Kubernetes API servers exposed by the Tailscale operators in your remote clusters.

Set Up DNS Configuration in ArgoCD Cluster

Why DNS Configuration is Necessary

DNS configuration is a critical component that enables your ArgoCD cluster to resolve Tailnet domain names. Without this configuration:

- Your cluster cannot resolve the *.ts.net domains that Tailscale uses

- Communication between clusters would fail as hostname resolution would not work

- ArgoCD would be unable to connect to remote Kubernetes API servers

The Tailscale DNS nameserver provides resolution for all nodes in your Tailnet, enabling seamless cross-cluster communication through Tailscale’s private network.

Implementation

Create a DNSConfig resource in the ArgoCD cluster:
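
A minimal sketch of the DNSConfig manifest, assuming the resource name ts-dns and the nameserver image used in Tailscale's own examples. Both are assumptions; adjust them to your setup.

```shell
# Write the DNSConfig manifest (resource name and image are assumptions).
cat > dnsconfig.yaml <<'EOF'
apiVersion: tailscale.com/v1alpha1
kind: DNSConfig
metadata:
  name: ts-dns
spec:
  nameserver:
    image:
      repo: tailscale/k8s-nameserver
      tag: unstable
EOF
```

The manifest could then be applied in the ArgoCD cluster with `kubectl apply -f dnsconfig.yaml`.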


Find the nameserver IP:
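
One way to look this up, assuming the DNSConfig is named ts-dns (an assumption; substitute your resource name). The command needs access to the ArgoCD cluster.

```shell
# List the DNSConfig; the nameserver IP appears in the output once it is ready.
kubectl get dnsconfig ts-dns

# Or print only the IP from the resource status.
kubectl get dnsconfig ts-dns -o jsonpath='{.status.nameserver.ip}'
```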


Update CoreDNS configuration to forward Tailscale domain lookups to the Tailscale nameserver:
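
A sketch of the CoreDNS stanza, assuming a standard Corefile layout. The IP address below is only a placeholder for the nameserver IP found in the previous step, and the output file name is hypothetical.

```shell
# Placeholder IP; set TS_NAMESERVER_IP to the value reported by your DNSConfig.
TS_NAMESERVER_IP="${TS_NAMESERVER_IP:-10.100.124.196}"

# Write a server block that forwards ts.net lookups to the Tailscale nameserver.
cat > coredns-tsnet.conf <<EOF
ts.net {
    errors
    cache 30
    forward . ${TS_NAMESERVER_IP}
}
EOF
```

The generated block would then be merged into the Corefile held in the CoreDNS ConfigMap, which in many clusters is edited with `kubectl edit configmap coredns -n kube-system`. The ConfigMap name and namespace vary by distribution.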


This configuration tells CoreDNS to forward all ts.net domain resolution requests to the Tailscale nameserver, allowing pods in your cluster to resolve Tailnet hostnames.

Access Remote Clusters

Generate the kubeconfig for each cluster:
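
A sketch using the Tailscale CLI, assuming it runs on a machine that is connected to the tailnet and covered by the grant above. The hostnames follow the examples used earlier in this guide.

```shell
# Add a kubeconfig entry for each remote cluster's operator.
for cluster in cluster1 cluster2; do
  tailscale configure kubeconfig "${cluster}-k8s-operator"
done

# Confirm which contexts were added.
kubectl config get-contexts
```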


Add Clusters to ArgoCD

Add the remote clusters to ArgoCD:
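
A sketch using the argocd CLI, assuming you are already logged in to ArgoCD and that each kubeconfig context is named after the operator's full tailnet hostname. Both the context naming and the display names are assumptions; check the real context names with `kubectl config get-contexts` first.

```shell
# Placeholder; replace with your tailnet name.
TAILNET="your-tailnet"

# Register each remote cluster using its kubeconfig context.
for cluster in cluster1 cluster2; do
  argocd cluster add "${cluster}-k8s-operator.${TAILNET}.ts.net" --name "${cluster}"
done

# List registered clusters and their connection status.
argocd cluster list
```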


Visual Confirmation

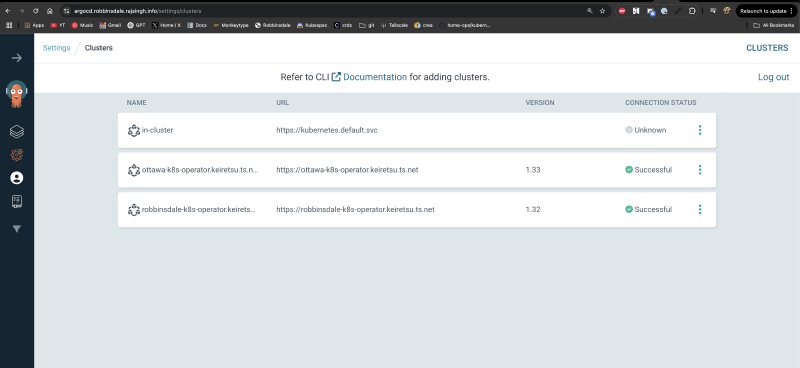

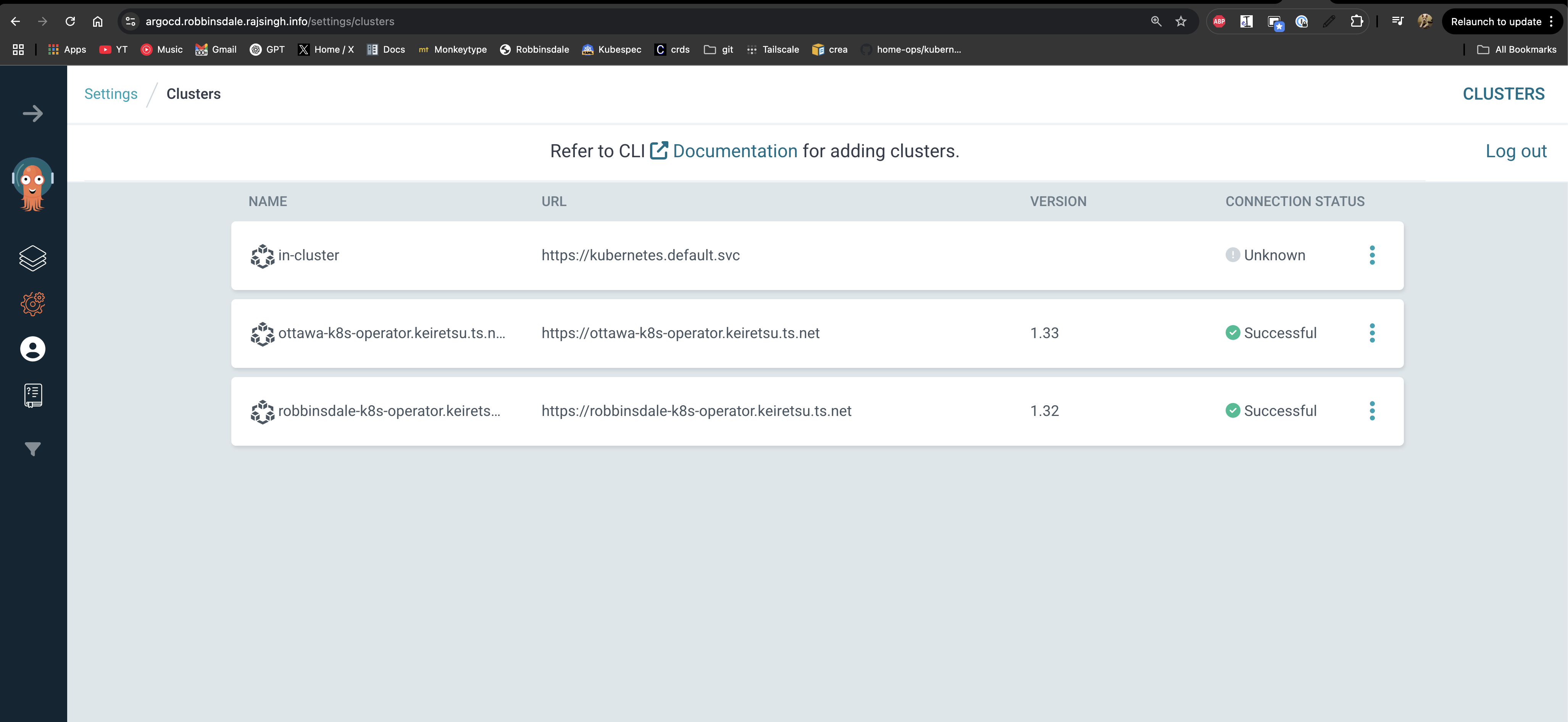

Once configured, ArgoCD will show the clusters as successfully connected within your Tailnet:

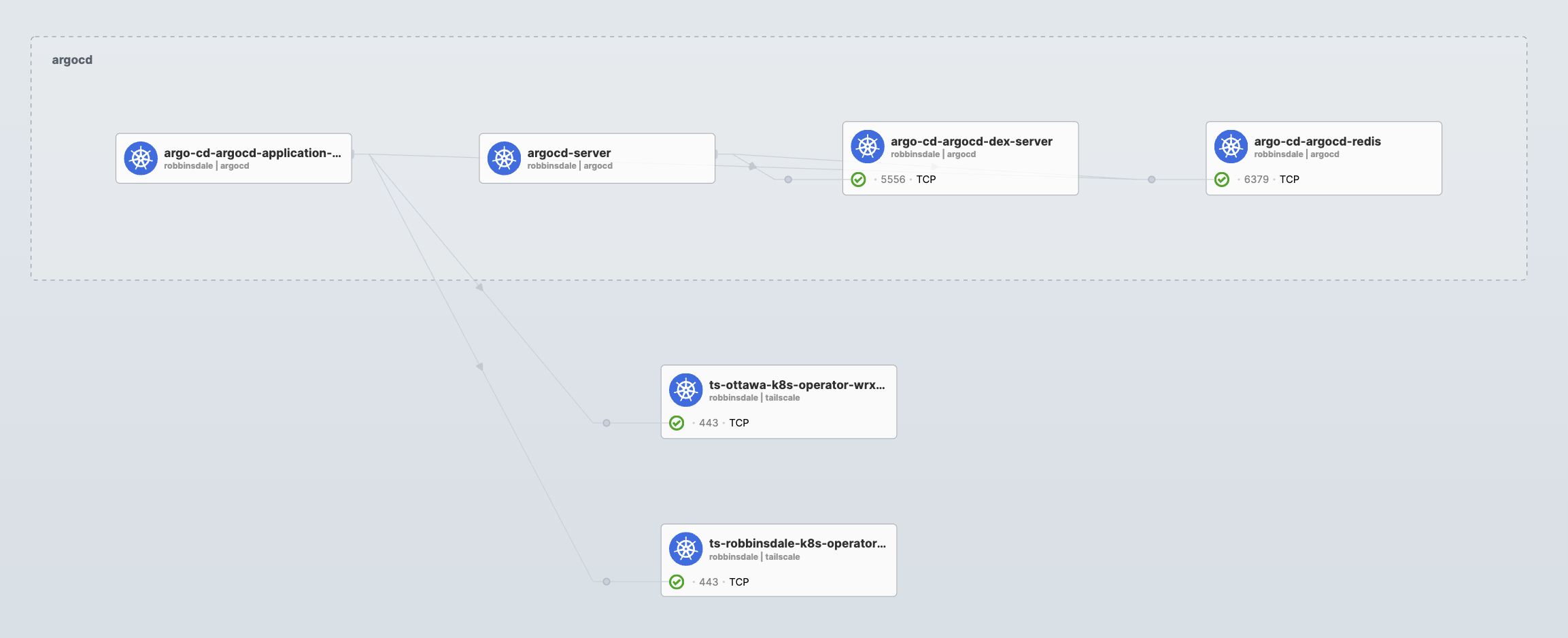

Hubble flows will also demonstrate ArgoCD communicating with the Tailscale egress proxies for each remote cluster: